Abstract

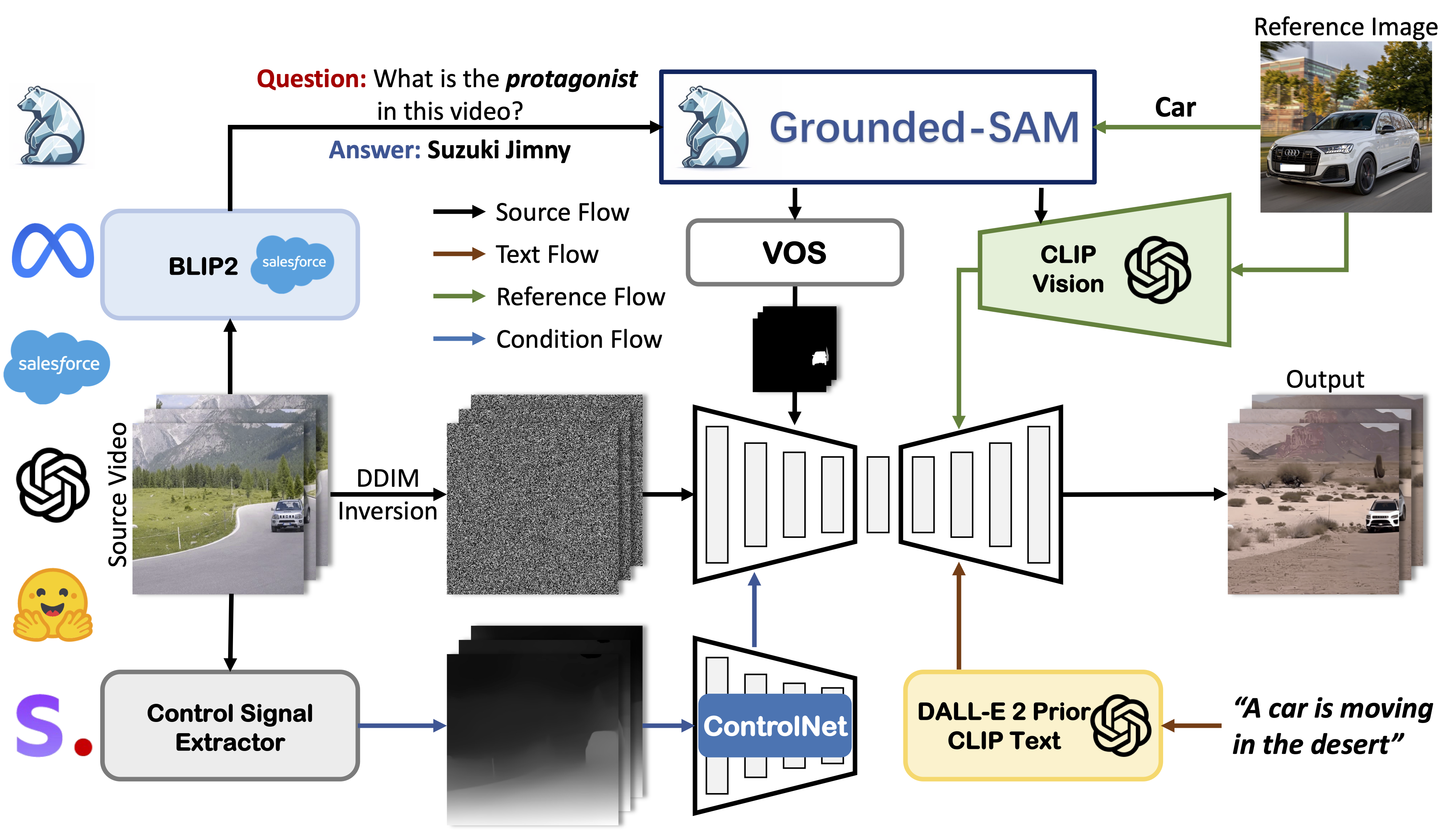

Text-driven image and video diffusion models have achieved unprecedented success in generating realistic and diverse content. Recently, editing and creating variations of existing images and videos with diffusion-based generative models has garnered significant attention. However, previous works are limited to editing content with text or providing coarse personalization from a single visual clue, which makes them unsuitable for content that is hard to describe in words and requires fine-grained, detailed control. To address this, we propose a generic video editing framework called Make-A-Protagonist, which uses textual and visual clues to edit videos, with the goal of empowering individuals to become the protagonists. Specifically, we leverage multiple experts to parse the source video and the target visual and textual clues, and propose a visual-textual-based video generation model that employs mask-guided denoising sampling to generate the desired output. Extensive results demonstrate the versatile and remarkable editing capabilities of Make-A-Protagonist.

Introduction

Method

The first framework for generic video editing with both visual and textual clues.
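The abstract mentions mask-guided denoising sampling without spelling out the mechanics. As a rough illustration of the general idea only, and not the authors' implementation, the sketch below assumes that at one denoising step there are two noise predictions (one conditioned on the protagonist's visual clue, one on the textual clue for the background) and that a per-frame protagonist mask decides which prediction is kept at each pixel. The function name `mask_guided_blend` and all array shapes are hypothetical.

```python
import numpy as np


def mask_guided_blend(eps_protagonist, eps_background, mask):
    """Blend two noise predictions with a protagonist mask (illustrative only).

    eps_protagonist, eps_background: arrays of shape (frames, channels, height, width).
    mask: array of shape (frames, 1, height, width) with values in [0, 1],
          where 1 marks the protagonist region. It broadcasts over channels.
    """
    mask = np.asarray(mask, dtype=eps_protagonist.dtype)
    # Inside the mask keep the protagonist prediction, outside keep the background one.
    return mask * eps_protagonist + (1.0 - mask) * eps_background


# Toy inputs standing in for one denoising step on a 2-frame latent video.
frames, channels, height, width = 2, 4, 8, 8
rng = np.random.default_rng(0)
eps_fg = rng.standard_normal((frames, channels, height, width))
eps_bg = rng.standard_normal((frames, channels, height, width))

# Hypothetical mask: a square protagonist region in the middle of every frame.
mask = np.zeros((frames, 1, height, width))
mask[:, :, 2:6, 2:6] = 1.0

blended = mask_guided_blend(eps_fg, eps_bg, mask)
```

In a real sampler this blend would be repeated at every denoising step, with the mask coming from a segmentation or tracking model run on the source video. Those components are outside the scope of this sketch.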

Results

Text-to-Video Editing with Protagonist

| Source | Edited |
|---|---|
| "A man playing basketball" | "A man playing basketball on the beach, anime style" |
| "A girl in white dress dancing on a bridge" | "A girl dancing on the beach, anime style" |
| "A man dancing in a room" | "A man in dark blue suit with white shirt dancing on the beach" |
| "A red macaw flying over a river" | "An eagle flying in the city" |
| "A man walking down the street" | "A panda walking down the snowy street" |
| "A Suzuki Jimny driving down a mountain road" | "A car moving in the desert" |
| "A drift car driving down a track" | "A car driving down a track in the snow" |
| "A man riding a dirt bike through a river" | "A man riding a motorbike in the snow, anime style" |

Protagonist Editing

Background Editing

| Source | Edited |
|---|---|
| "A man playing basketball" | "A man playing basketball in the forest" |
| "A drift car driving down a track" | "A drift car driving down a track at night" |
| "A Suzuki Jimny driving down a mountain road" | "A Suzuki Jimny driving down a mountain road in the rain" |
| "A red macaw flying over a river" | "A red macaw flying over the hills in the snow" |

Bibtex